News release

New vision system can see motion faster than humans

A computer-vision system can detect movement in visual scenes faster than humans and up to four times faster than existing approaches, according to research published in Nature Communications. This technology could help make autonomous systems, such as self-driving cars and small robots, safer and more responsive in fast-changing environments.

Computer-vision systems commonly track movement by estimating the apparent motion of objects between successive frames of a scene, known as optical flow. However, optical-flow analysis of real-world motion is often constrained by the large amount of computing power needed to process visual data in real time. Biological eyes address this challenge by dynamically focusing on regions where movement is occurring.
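To make the term concrete, here is a minimal sketch of one classic optical-flow estimator, the Lucas-Kanade method, applied to a single window of two synthetic frames. It is a textbook technique chosen for illustration, not the method used in the study. The function names, the smooth "bowl" brightness pattern and the window size are all assumptions. Estimating a dense flow field means repeating a computation like this around every pixel of every frame, which is why real-time analysis is computationally demanding.

```python
def make_frame(width, height, cx, cy, dx=0.0, dy=0.0):
    """Hypothetical test frame: a smooth 'bowl' of brightness centred on
    (cx, cy), shifted by (dx, dy) pixels to simulate motion."""
    return [[(x - dx - cx) ** 2 + (y - dy - cy) ** 2 for x in range(width)]
            for y in range(height)]


def lucas_kanade_window(frame0, frame1, cx, cy, half):
    """Estimate one displacement (u, v) for the window centred on (cx, cy).

    Solves Ix*u + Iy*v = -It in the least-squares sense over every pixel in
    the window, where Ix and Iy are spatial gradients of frame0 (central
    differences) and It is the brightness change between the two frames.
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(cy - half, cy + half + 1):
        for x in range(cx - half, cx + half + 1):
            ix = (frame0[y][x + 1] - frame0[y][x - 1]) / 2.0
            iy = (frame0[y + 1][x] - frame0[y - 1][x]) / 2.0
            it = frame1[y][x] - frame0[y][x]
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        # Gradients all point the same way (or vanish): motion is ambiguous.
        raise ValueError("window has too little texture (aperture problem)")
    # Closed-form inverse of the 2x2 normal-equation matrix.
    u = (-syy * sxt + sxy * syt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v


f0 = make_frame(21, 21, 10, 10)
f1 = make_frame(21, 21, 10, 10, dx=0.6, dy=-0.3)
u, v = lucas_kanade_window(f0, f1, 10, 10, 4)
print(round(u, 3), round(v, 3))  # 0.6 -0.3
```

The synthetic pattern is chosen so that the estimate is exact for a window centred on the bowl; on real images the result is approximate, and practical implementations add image pyramids to cope with larger motions.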

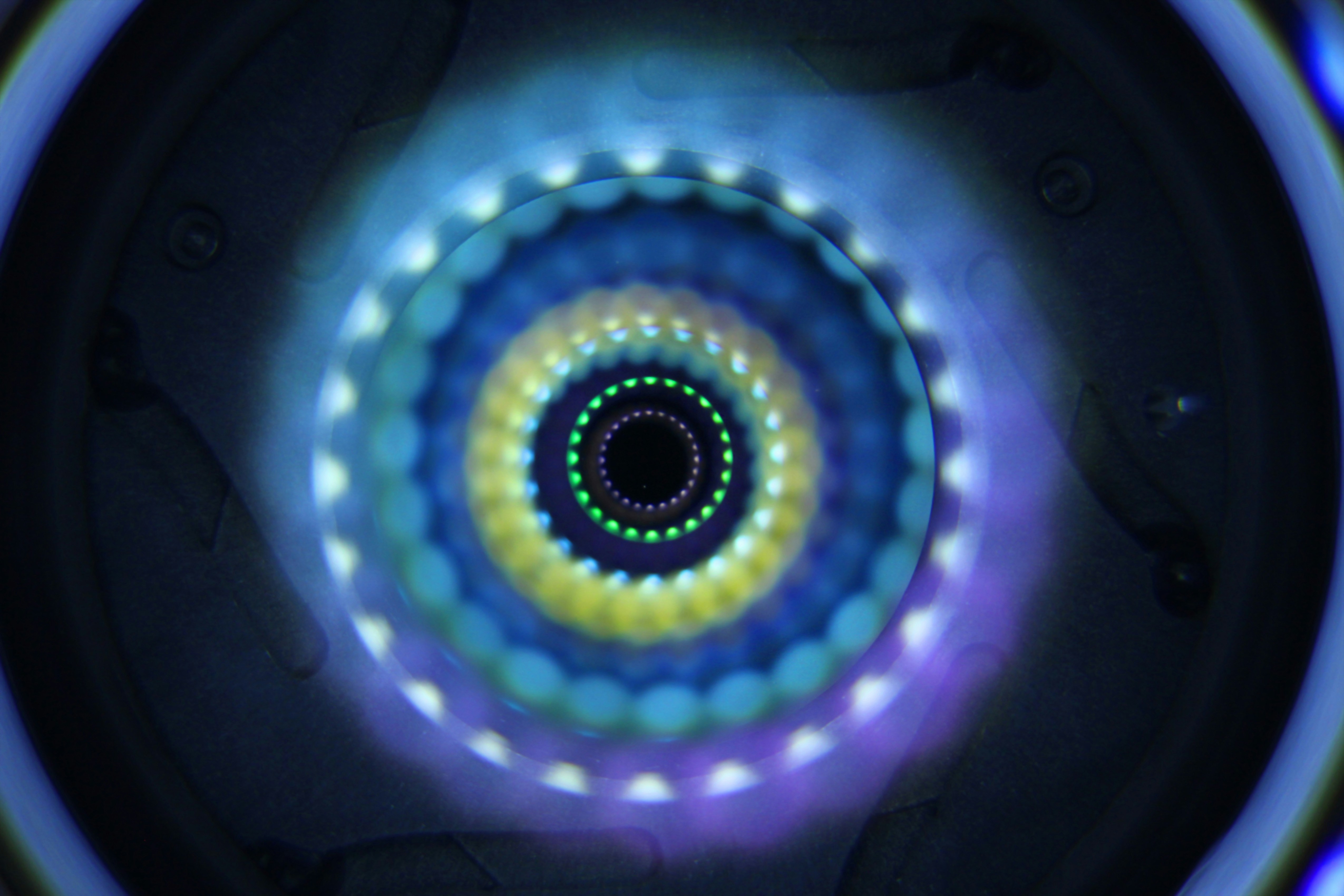

Shengbo Wang and colleagues mimic biological visual perception by combining electronic hardware with downstream optical-flow calculations. The hardware is built on artificial synaptic transistors that detect brightness changes to identify where motion occurs, so that static regions are not processed. These ‘regions of interest’ are then passed to conventional vision algorithms for further analysis. The researchers tested the system in a variety of scenarios, including vehicle driving, unmanned aerial vehicle flight, and robotic-arm control. The new system processed scenes approximately four times faster than existing methods while maintaining or improving accuracy. In addition, the hardware surpassed human-level processing speeds in most use cases.
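In software terms, the gating step described above resembles frame differencing: flag pixels whose brightness changed between frames, draw a box around them, and pass only that box on to the optical-flow stage. The sketch below is a rough software analogy under that assumption, not the authors' transistor-based hardware. The function names, the threshold and the synthetic scene are hypothetical.

```python
def make_scene(width, height, square_x, square_y, size=4):
    """Hypothetical test scene: dim background (10) with one bright (200) square."""
    return [[200 if (square_x <= x < square_x + size and square_y <= y < square_y + size) else 10
             for x in range(width)] for y in range(height)]


def changed_region(frame0, frame1, threshold):
    """Software stand-in for in-sensor change detection (an assumption, not the
    paper's hardware): return the inclusive bounding box (x0, y0, x1, y1) of
    pixels whose brightness changed by more than `threshold`, or None."""
    xs, ys = [], []
    for y, (row0, row1) in enumerate(zip(frame0, frame1)):
        for x, (a, b) in enumerate(zip(row0, row1)):
            if abs(b - a) > threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None  # static scene: nothing to send downstream
    return min(xs), min(ys), max(xs), max(ys)


def crop(frame, box):
    """Cut the region of interest out of a frame (box bounds are inclusive)."""
    x0, y0, x1, y1 = box
    return [row[x0:x1 + 1] for row in frame[y0:y1 + 1]]


before = make_scene(64, 48, 20, 10)
after = make_scene(64, 48, 22, 10)  # the square moved 2 pixels to the right
box = changed_region(before, after, threshold=20)
print(box)  # (20, 10, 25, 13)
roi = crop(after, box)
print(len(roi) * len(roi[0]), "of", 64 * 48, "pixels")  # 24 of 3072 pixels
```

Only the cropped region would then go to an optical-flow routine, so in this toy scene less than 1% of the frame is analysed. A single bounding box is the simplest choice; with several moving objects, changed pixels would more likely be grouped into separate regions.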

Faster processing of real-time visual data could enable autonomous systems to handle complex tasks, such as collision avoidance and object tracking, more efficiently. Further research is needed to evaluate the vision system in a more diverse range of environments.

International