News release

From:

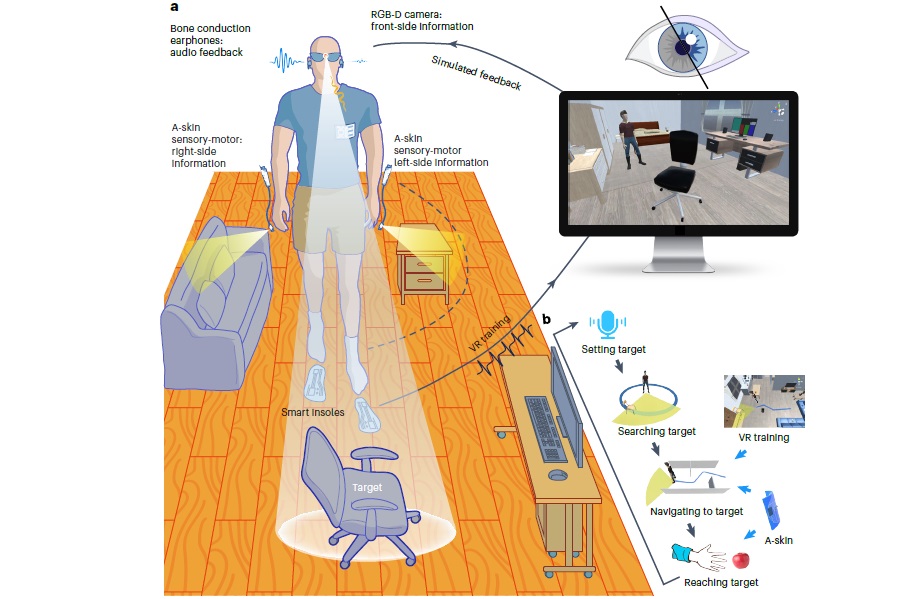

Biomedical engineering: A wearable AI system to help blind people navigate *VIDEOS*

A wearable system designed to assist navigation for blind and partially sighted people is presented in Nature Machine Intelligence. This system uses artificial intelligence (AI) algorithms to survey the environment and send signals to the wearer as they approach an obstacle or object.

Wearable electronic visual assistance systems offer a promising alternative to medical treatments and implanted prostheses for blind and partially sighted people. These devices convert visual information from the environment into other sensory signals to assist with daily tasks. However, current systems are difficult to use, which has hindered widespread adoption.

Leilei Gu and colleagues present a wearable visual assistance system that gives directions via voice commands. The authors developed an AI algorithm that processes video from a camera in the device to determine an obstacle-free route for the user. Information about the environment in front of the user is delivered through bone conduction headphones. The authors also created stretchable artificial skins, worn on the wrists, that send vibration signals to guide the user's direction of movement and help them avoid objects to the side. The authors tested the device with humanoid robots and with blind and partially sighted participants in both virtual and real-world environments. They observed significant improvements in navigation and post-navigation tasks among the participants, such as avoiding obstacles while moving through a maze and reaching for and grasping an object.

The findings suggest that the integration of visual, audio and haptic senses can enhance the usability and functionality of visual assistance systems. Future research should focus on refining the system further and exploring its potential applications in other areas of assistive technology.

International
