News release

Artificial intelligence: Designing agents that can communicate and cooperate in Diplomacy

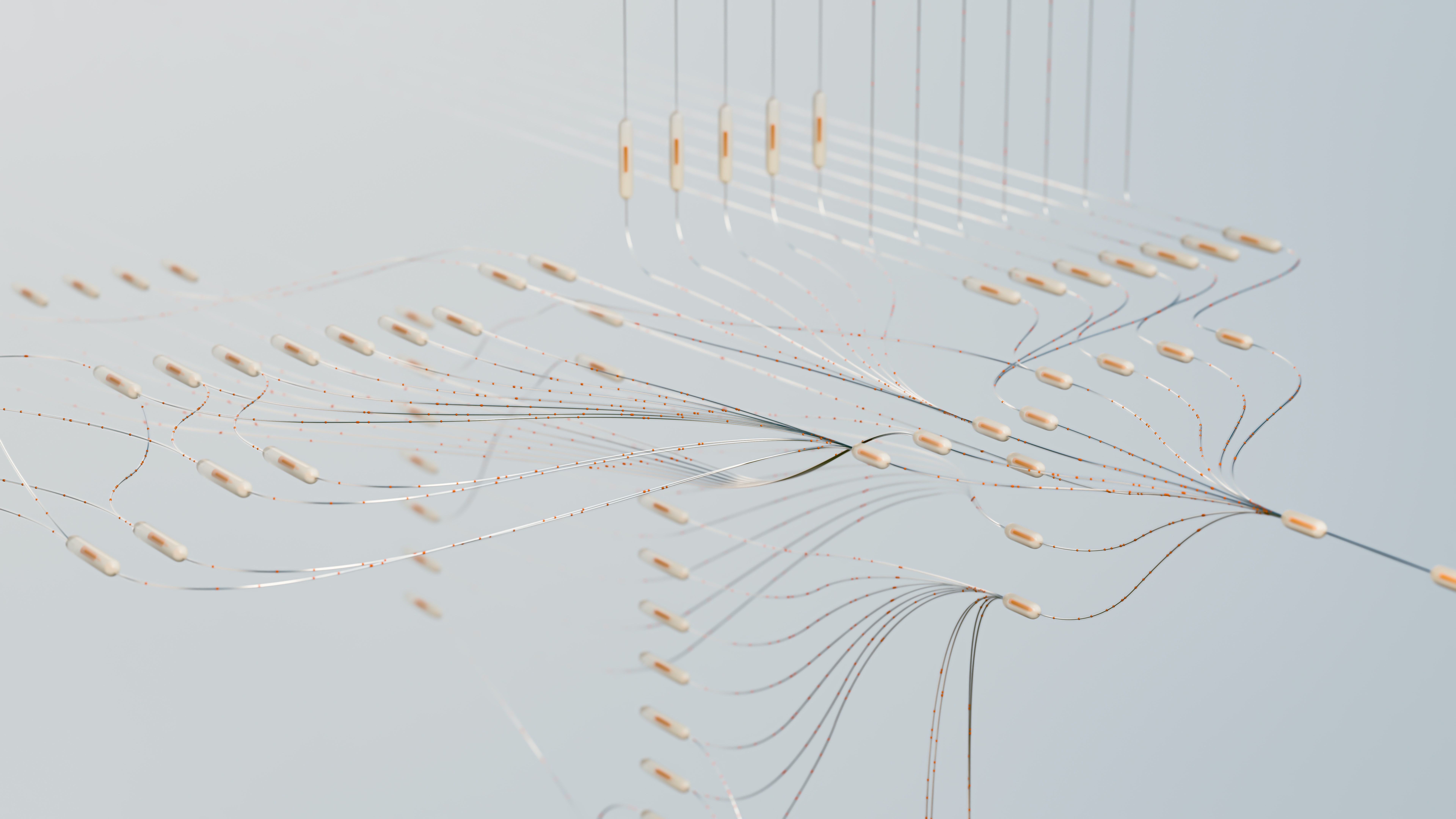

Artificial intelligence (AI) agents that can negotiate and form agreements, and thereby outperform agents without this ability in the board game Diplomacy, are reported in a Nature Communications paper. The findings demonstrate a deep reinforcement learning approach for modelling agents that can communicate and cooperate with other artificial agents to make joint plans when playing the game.

Developing AI agents that can cooperate and communicate with one another is important. Diplomacy is a popular board game that offers a useful test bed for such behaviour, as it involves complex communication, negotiation and alliance formation between the players, all of which have been long-standing challenges for AI. Playing Diplomacy successfully requires reasoning about the players' concurrent future plans, the commitments between players and whether players will honour them. Previous AI agents have achieved success in single-player or competitive two-player games without communication between players.

János Kramár, Yoram Bachrach and colleagues designed a deep reinforcement learning approach that enables agents to negotiate alliances and joint plans. The authors created agents that model game players and form teams that try to counter the strategies of other teams. The learning algorithm allows agents to agree on future moves and to identify beneficial deals by predicting possible future game states. As a step towards human-level performance, the authors also investigated the conditions for honest cooperation by examining scenarios in which agents break commitments, that is, deviate from past agreements.

The findings help form the basis of flexible communication mechanisms in AI agents that enable them to adapt their strategies to their environment. Additionally, the findings show how the inclination to sanction peers who break contracts dramatically reduces the advantage of such deviators, and helps foster mostly truthful communication, despite conditions that initially favour deviations from agreements.

International
