News release

From:

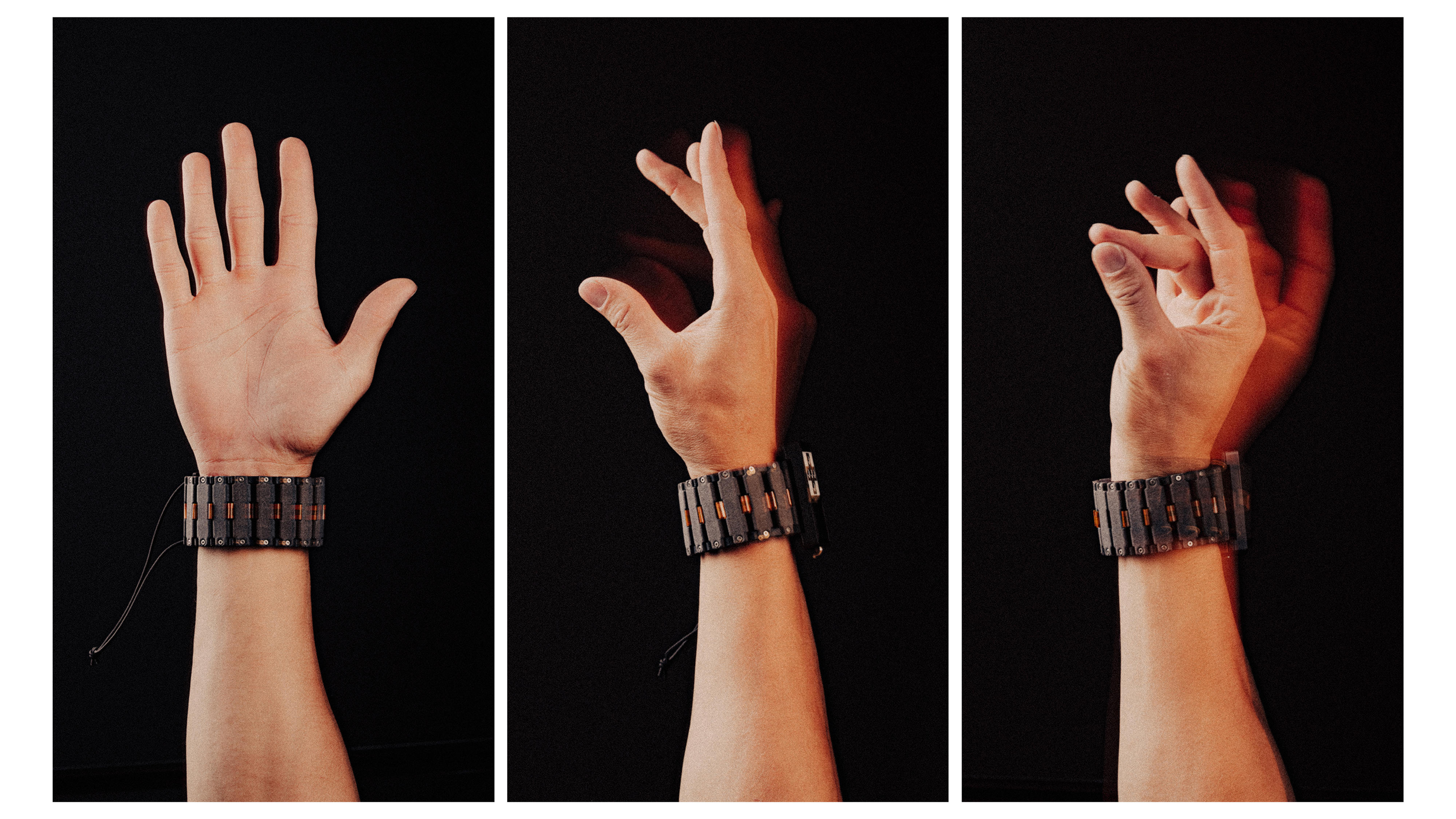

Bracelet translates hand gestures into computer commands

A wrist-worn device that allows users to interact with computers through hand gestures, such as handwriting movements, is described in Nature. The device can translate the electrical signals generated by muscle movements at the wrist into computer commands without the need for personalized calibration or invasive procedures. The findings could help to make interactions between humans and computers more seamless and accessible at scale.

Traditional methods of human interaction with technology, such as computers and smartphones, require direct contact with input devices such as keyboards, mice and touchscreens. Such interactions can be limiting, especially in on-the-go scenarios.

A team from Reality Labs at Meta, led by Patrick Kaifosh and Thomas Reardon, engineered a highly sensitive wristband that detects electrical signals from muscles at the wrist and translates them into computer signals, drawing on training data from thousands of participants. The authors then used deep learning to create generic decoding models that accurately interpret user input across different people without needing individual calibration. Consistent with other deep learning domains, the performance of the decoding models follows power-law scaling, improving with larger model architectures and more data. The authors showed that performance can be further improved through personalization with data from a specific individual. Together, the scaling and personalization findings offer a recipe for building high-performance biosignal decoders for many applications.
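As a rough illustration of what power-law scaling means in practice, the sketch below fits a curve of the form error = a × N^(-b) to a handful of data points by fitting a straight line in log-log space. This is not the authors' analysis: the function name `fit_power_law`, the participant counts and the error rates are all hypothetical, and the numbers are synthetic values generated for the example rather than results from the paper.

```python
import math

def fit_power_law(xs, ys):
    """Least-squares fit of y = a * x**(-b), done as a line fit in log-log space."""
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in ys]
    n = len(log_x)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n
    # Slope and intercept of the best-fit line log(y) = intercept + slope * log(x)
    slope = (
        sum((u - mean_x) * (v - mean_y) for u, v in zip(log_x, log_y))
        / sum((u - mean_x) ** 2 for u in log_x)
    )
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope  # (a, b)

# Synthetic, illustrative values only (NOT taken from the paper):
# error rates generated from an exact power law with a = 0.5 and b = 0.3.
participants = [100, 200, 400, 800, 1600]
error_rate = [0.5 * n ** -0.3 for n in participants]

a, b = fit_power_law(participants, error_rate)
print(f"fitted a = {a:.3f}, fitted b = {b:.3f}")
```

On a log-log plot, a relationship like this appears as a straight line, which is why each doubling of the training data yields the same proportional reduction in error.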

The device, which communicates with a computer through a Bluetooth receiver, recognizes gestures in real time to enable the creation of low-effort controls for a wide range of computer interactions. The controls were used to complete virtual navigation and selection tasks, as well as text entry by handwriting at 20.9 words per minute (by comparison, typing on a mobile phone keyboard averages around 36 words per minute).

The authors suggest that their neuromotor wristband offers a wearable method of computer communication for differently-abled people. Neuromotor interfaces are well suited to future research exploring accessibility applications of this technology, such as improving computer interactions for individuals with reduced mobility, muscle weakness, finger amputations, paralysis and more. To accelerate future work on surface electromyography (sEMG) and sEMG modeling in the broader community, the Reality Labs team is publicly releasing a repository containing over 100 hours of sEMG recordings from 300 participants across all three tasks in the publication.
