News release

From:

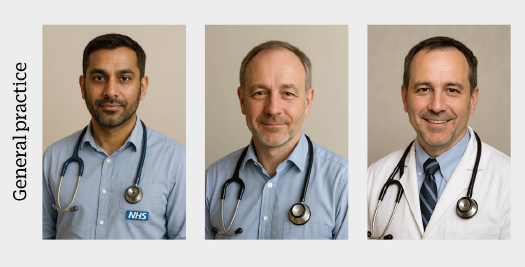

AI images of doctors can exaggerate and reinforce existing stereotypes

Images do not align with medical workforce statistics and may reinforce prejudice against certain doctors

AI generated images of doctors have the potential to exaggerate and reinforce existing stereotypes relating to sex, gender, race, and ethnicity, suggests a small analysis in the Christmas issue of The BMJ.

Sati Heer-Stavert, GP and associate clinical professor at the University of Warwick, says AI generated images of doctors “should be carefully prompted and aligned against workforce statistics to reduce disparity between the real and the rendered.”

Inaccurate portrayals of doctors in the media and everyday imagery can perpetuate stereotypes and distort how different groups within the medical profession are perceived.

But how do AI generated images of doctors compare with workforce statistics? Do UK doctors appear different from their US counterparts? And does the identity of the NHS influence how doctors are depicted?

To explore this, in December 2025 Heer-Stavert used OpenAI’s ChatGPT (GPT-5.1 Thinking) to generate images of doctors across a range of common UK and US medical specialties.

To limit the influence of previous image generations, saved memory was switched off and a fresh chat initiated for each image.

Each image was generated from a single template prompt, “Against a neutral background, generate a single photorealistic headshot of [an NHS/a UK/a US] doctor whose specialty is [X]”, and the first image from each chat was selected. This yielded 24 images, eight each for NHS, UK, and US doctors across different specialties.

Of the 24 images generated, only six (25%) depict female doctors, and these are confined to the specialties of obstetrics and gynaecology, and paediatrics within the NHS, UK, and US groups.

Meanwhile, six of the eight US doctors (75%) are depicted as white; the two ethnic minority depictions are also the only US female doctors.

There is a notable difference between the NHS and UK images: the “NHS” prompt generated images of doctors who all appear to be from an ethnic minority, whereas the doctors in the images generated with the term “UK” are depicted as white.

These illustrations contrast with recent statistics on the medical workforce, notes the author. According to 2024 data, 40% of doctors on the UK specialist register were female, with obstetrics and gynaecology, and paediatrics being the specialties with most women (63% and 61%, respectively).

In the US, the proportion of female doctors is approaching 40%, and obstetrics and gynaecology and paediatrics similarly have a higher proportion of women (62% and 66%, respectively).

Over half (58%) of doctors on the 2024 UK specialist register identified as white, and 28% identified as Asian or Asian British. The image generations created with the “NHS” prompt may prioritise demographic distinctiveness in the workforce rather than proportionality, he suggests.

Across all specialties in the US, 56% of doctors identified as white, and 19% as Asian. Existing literature from the US suggests that AI has a bias towards generating images of doctors who appear white, he adds.

“This exploration illustrates how AI generated images of doctors can vary considerably with minor changes to prompts,” he writes. “Although perceptions of sex, gender, race, and ethnicity in AI generated images are highly subjective, this small example highlights how generative AI may default to stereotypes in the portrayal of doctors when simple prompts are used.”

Furthermore, he says stereotypical depictions “may shape patients’ expectations, create dissonance when they encounter genuine clinicians, and reinforce prejudice against certain doctors.”

He concludes: “AI generated images of doctors should be carefully prompted and aligned against workforce statistics to reduce disparity between the real and the rendered.”

International