News release

Brain-computer interface could decode inner speech in real time

Scientists have pinpointed brain activity related to inner speech, the silent monologue in people’s heads, and successfully decoded it on command with up to 74% accuracy. Publishing August 14 in the Cell Press journal Cell, their findings could help people who are unable to speak audibly communicate more easily using brain-computer interface (BCI) technologies that start translating inner speech only after a participant silently thinks of a password.

“This is the first time we’ve managed to understand what brain activity looks like when you just think about speaking,” says lead author Erin Kunz of Stanford University. “For people with severe speech and motor impairments, BCIs capable of decoding inner speech could help them communicate much more easily and more naturally.”

BCIs have recently emerged as a tool to help people with disabilities. Using sensors implanted in brain regions that control movement, BCI systems can decode movement-related neural signals and translate them into actions, such as moving a prosthetic hand.

Research has shown that BCIs can even decode attempted speech among people with paralysis. When users physically attempt to speak out loud by engaging the muscles related to making sounds, BCIs can interpret the resulting brain activity and type out what they are attempting to say, even if the speech itself is unintelligible.

Although BCI-assisted communication is much faster than older technologies, including systems that track users’ eye movements to type out words, attempting to speak can still be tiring and slow for people with limited muscle control.

The team wondered if BCIs could decode inner speech instead.

“If you just have to think about speech instead of actually trying to speak, it’s potentially easier and faster for people,” says Benyamin Meschede-Krasa, the paper’s co-first author, of Stanford University.

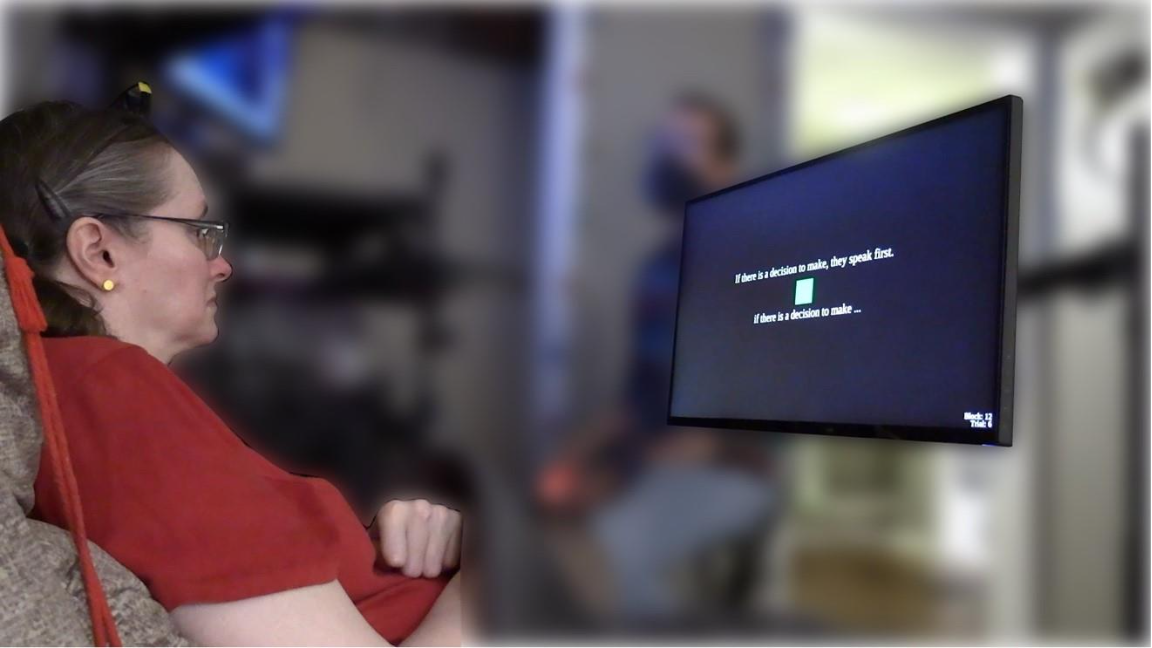

The team recorded neural activity from microelectrodes implanted in the motor cortex—a brain region responsible for speaking—of four participants with severe paralysis from either amyotrophic lateral sclerosis (ALS) or a brainstem stroke. The researchers asked the participants to either attempt to speak or imagine saying a set of words. They found that attempted speech and inner speech activate overlapping regions in the brain and evoke similar patterns of neural activity, but inner speech tends to show a weaker magnitude of activation overall.

Using the inner speech data, the team trained artificial intelligence models to interpret imagined words. In a proof-of-concept demonstration, the BCI could decode imagined sentences from a vocabulary of up to 125,000 words with an accuracy rate as high as 74%. The BCI also picked up inner speech that some participants were never instructed to produce, such as numbers when they were asked to count the pink circles on the screen.

The team also found that while attempted speech and inner speech produce similar patterns of neural activity in the motor cortex, they were different enough to be reliably distinguished from each other. Senior author Frank Willett of Stanford University says researchers can use this distinction to train BCIs to ignore inner speech altogether.

For users who may want to use inner speech as a method for faster or easier communication, the team also demonstrated a password-controlled mechanism that would prevent the BCI from decoding inner speech unless temporarily unlocked with a chosen keyword. In their experiment, users could think of the phrase “chitty chitty bang bang” to begin inner-speech decoding. The system recognized the password with more than 98% accuracy.

While current BCI systems are unable to decode free-form inner speech without making substantial errors, the researchers say more advanced devices with more sensors and better algorithms may be able to do so in the future.

“The future of BCIs is bright,” Willett says. “This work gives real hope that speech BCIs can one day restore communication that is as fluent, natural, and comfortable as conversational speech.”
