News release

From:

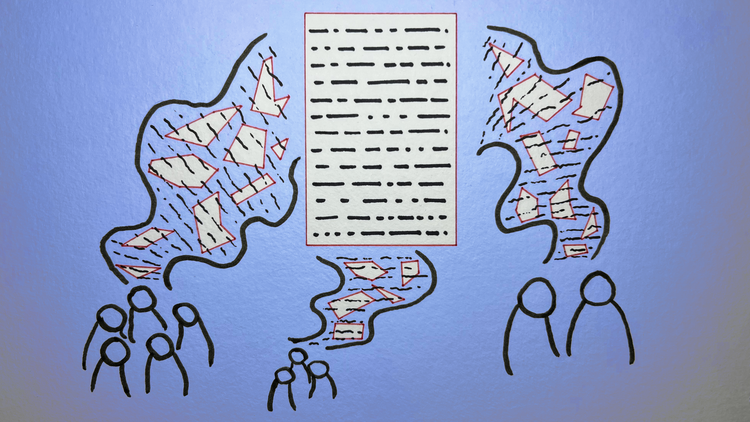

Truth be told – Should large language models be legally obligated to tell the truth? Researchers examined whether a legal duty for AI to be truthful already exists and whether it would be feasible. Current frameworks were found to be limited and sector-specific. The authors propose a pathway to ‘create a legal truth duty for providers of narrow- and general-purpose LLMs’.

International
