News release

From:

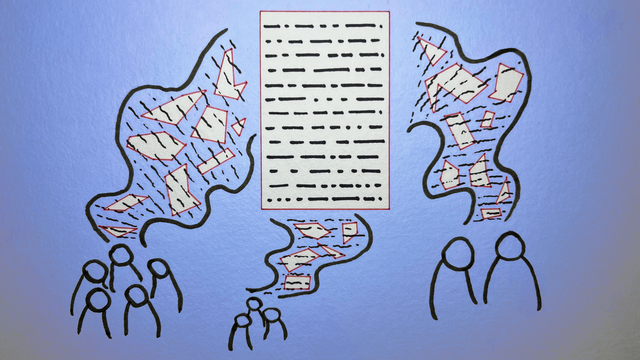

AI chatbots overgeneralise when asked to summarise scientific papers, creating “significant risk of large-scale misinterpretations of research findings”. An analysis of 4,900 chatbot-generated summaries of papers, on topics ranging from coffee’s health benefits to climate change beliefs, found that summaries from some chatbots were nearly five times more likely to overgeneralise findings than human-written summaries. Ironically, prompting the chatbots for accuracy increased this tendency. The researchers call for stronger safeguards and highlight potential mitigation strategies, such as benchmarking large language models for accuracy.

International
